Azure Face, Part 4: Is Doug Happy?

When looking at a photo, we can often tell how happy a person is, or at least make a good guess. But judging whether someone is happy from their facial expression is no longer just for humans: Azure's Face API can make that guess too. Let's dive in and see how it works.

In previous posts, we looked at how the Azure Face API can determine how many people are in a picture, and even who they are.

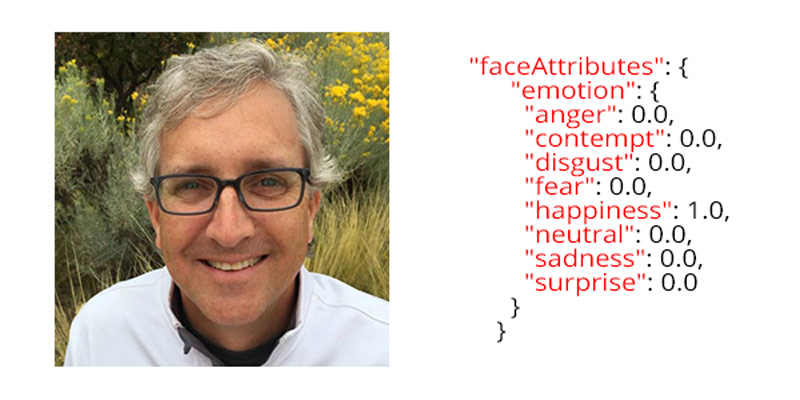

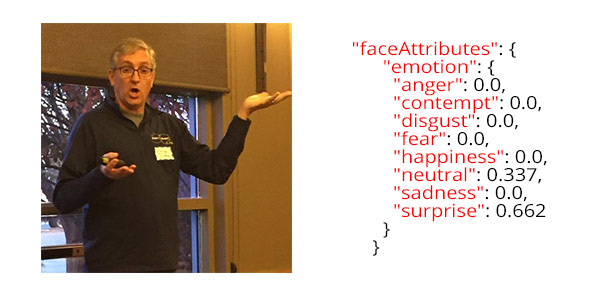

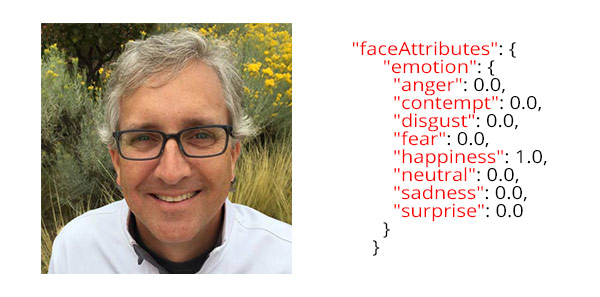

Now we are going to look at the emotional state of the faces within the picture. This will let us answer the question: is Doug happy? We'll start by having our project look at these two images.

The code to accomplish this is similar to the other Azure Face API code we have seen. In fact, the setup for determining emotional state is much simpler than the one for comparing Doug and Bill in the previous post.

To have Azure take a stab at the emotional state of a person in a photo, you just need to call the DetectWithUrl method. It returns the location of each face it finds in the image, along with any face attributes you ask for.
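To make "is Doug happy?" concrete, here is a minimal Python sketch of reading a detection result. It assumes a JSON response shaped like the Face API v1.0 REST `detect` output requested with `returnFaceAttributes=emotion`: one entry per face, each with a `faceRectangle` and a set of emotion scores. The sample values are made up for illustration and are not Azure's assessment of our photos. The helper names `dominant_emotion`, `summarize_faces`, and `is_happy` (including its 0.5 threshold) are hypothetical.

```python
import json

# Hypothetical sample shaped like a Face API v1.0 "detect" JSON response
# requested with returnFaceAttributes=emotion. The scores are invented for
# illustration; they are not Azure's output for the photos in this post.
SAMPLE_RESPONSE = """
[
  {
    "faceId": "00000000-0000-0000-0000-000000000001",
    "faceRectangle": {"top": 120, "left": 85, "width": 110, "height": 110},
    "faceAttributes": {
      "emotion": {
        "anger": 0.0, "contempt": 0.001, "disgust": 0.0, "fear": 0.0,
        "happiness": 0.982, "neutral": 0.015, "sadness": 0.0, "surprise": 0.002
      }
    }
  }
]
"""


def dominant_emotion(emotion_scores):
    """Return (name, score) for the highest-scoring emotion."""
    name = max(emotion_scores, key=emotion_scores.get)
    return name, emotion_scores[name]


def summarize_faces(response_json):
    """Yield (rectangle, emotion name, score) for each detected face."""
    for face in json.loads(response_json):
        rect = face["faceRectangle"]
        name, score = dominant_emotion(face["faceAttributes"]["emotion"])
        yield rect, name, score


def is_happy(emotion_scores, threshold=0.5):
    # Hypothetical rule: call a face "happy" when happiness is the top
    # emotion and its confidence clears the threshold.
    name, score = dominant_emotion(emotion_scores)
    return name == "happiness" and score >= threshold


for rect, name, score in summarize_faces(SAMPLE_RESPONSE):
    print(f"Face at left={rect['left']}, top={rect['top']}: {name} ({score:.1%})")
```

Running it prints `Face at left=85, top=120: happiness (98.2%)`. Picking the top-scoring emotion is just one simple way to read the result, because the service reports a confidence for every emotion rather than a single verdict.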

Here’s how Azure assessed our two images.

You do have to tell the method which face attributes you want returned. If you don't ask for emotion data, you won't get emotion data. You can also ask for attributes such as age, gender, or even whether your subject is wearing glasses. You can see this in the code below.
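For comparison with the SDK call, here is a hedged Python sketch of the same attribute request expressed against the REST endpoint. It only builds the request and does not send it. It assumes the v1.0 `detect` route, the `returnFaceAttributes` query parameter, the `Ocp-Apim-Subscription-Key` header, and a JSON body carrying the image `url`. The endpoint, key, and helper names are hypothetical placeholders.

```python
import json
from urllib.parse import urlencode

# Hypothetical resource endpoint and key; substitute your own Face resource values.
ENDPOINT = "https://example-face-resource.cognitiveservices.azure.com"
KEY = "<your-subscription-key>"


def build_detect_url(endpoint, attributes):
    """Build a v1.0 detect URL that asks for specific face attributes.

    Only the attributes listed here are requested; leave out "emotion"
    and the response carries no emotion scores.
    """
    # safe="," keeps the comma-separated attribute list readable in the URL.
    query = urlencode({"returnFaceAttributes": ",".join(attributes)}, safe=",")
    return f"{endpoint.rstrip('/')}/face/v1.0/detect?{query}"


def build_detect_request(endpoint, key, image_url, attributes):
    """Return (url, headers, body) for a POST to detect; nothing is sent."""
    url = build_detect_url(endpoint, attributes)
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    body = json.dumps({"url": image_url})
    return url, headers, body


url, headers, body = build_detect_request(
    ENDPOINT, KEY, "https://example.com/doug.jpg",  # hypothetical image URL
    ["emotion", "age", "gender", "glasses"],
)
print(url)
```

The printed URL ends in `/face/v1.0/detect?returnFaceAttributes=emotion,age,gender,glasses`. Keeping the attribute list as a parameter makes it easy to see that emotion data is opt-in, just as it is with DetectWithUrl.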