March Madness with Infer.NET

As a “joint venture” (over a few drinks) with our friend from Hudl, Kyle Deterding, we decided to use the Halo-style matchmaking algorithm to predict the NCAA tournament. The algorithm uses Microsoft Reasearch’s Infer.NET framework to handle the math and statistics that go into these models. Kyle wrote a great introduction post explaining it at a high level.

Infer.NET is a framework for running Bayesian inference in graphical models. It can also be used for probabilistic programming as shown in this video. Infer.NET can be used to solve many machine learning problems and was used to make the Kinect so awesome. We’re using it for a much simpler task, determining the actual skill of teams based on their performance in games. This is done the same way Halo 3 determines your skill. Check out the FAQ, a nice blog post, or the gritty details (Warning: This link may contain Math).

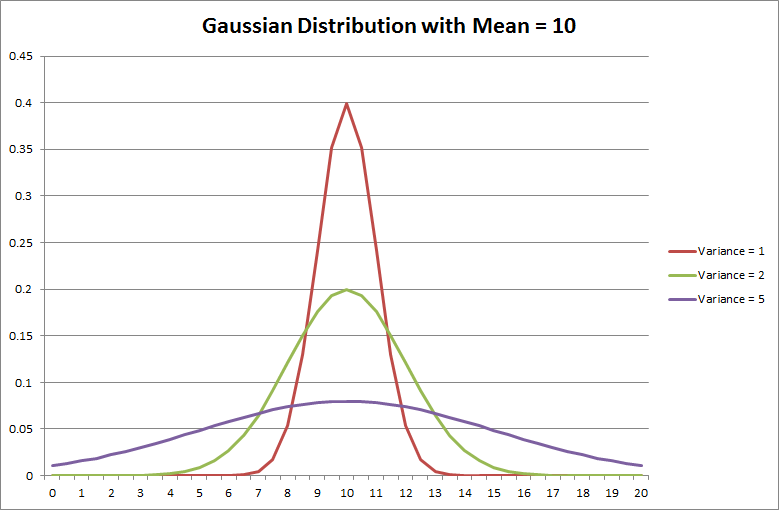

A key concept with this method is that your skill is represented with two variables:

- Mean: The predicted skill level

- Variance: The certainty of our prediction, higher variance means lower certainty

Your first game will have a very high variance because we have no idea what you should be ranked. As you play more and more games, your mean will change and your variance should get lower. We will eventually be able to say with high confidence (low variance) what your skill is. Here’s a statistics reminder for what different variances look like.

That makes sense, right? Well…everything except the “change” part.

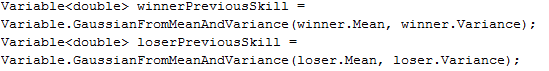

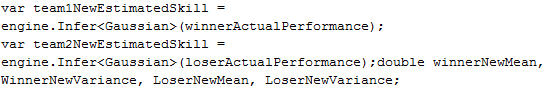

There are a couple assumptions that need to be made. First, the hidden variables are not known. That’s why they’re called “hidden”. Using this method, you assume there is a hidden, “true” skill that you are trying to find. You might not ever actually find it, but you use results from games to determine where it likely is. Second, it doesn’t matter how bad a team was beat. If you lose, you lose. Using this we can use Infer.NET to constrain certain attributes of the data. Let’s dive into some code. This is really all it comes down to. First we get our current skills (remember it’s a mean and variance).

The object Variable<double> simply means we know that winnerPreviousSkill is a double, but we aren’t sure what number it actually is. We use the Gaussian curve to approximate what this unknown value may be. It’s important to think of it as a variable in a math equation instead of a typical variable in code that you assign a single value. We create a distribution around that current skill of their actual skill based on their performance. Beta is essentially how far off of their actual skill they can play.

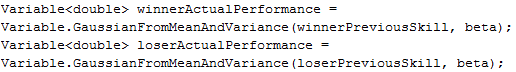

We don’t know anything about their actual skill, but we know that the winner should be better than the loser.

This is where the magic happens. The system averages all the possible performances for the players (weighted by their probabilities) and determines who would win. It will then arrive with a probability that one should win over the other. If team 1 has a much higher skill than team 2, it’s not very probable that team 2 wins. If their skills are close, the probability will head toward 50%. The engine then infers what the new skills should be based on the observation of the game (which team won the game). What’s this mean? If you beat someone much better than you, your rank will increase. If they were better than you, but had a high variance you won’t increase as much.

From there we just set the new skills and we’re done. On to the next game…

So how did our bracket do? We picked the national champion, UConn! It was our only correct pick for the Final Four. Only 6.6% of ESPN brackets had their national champion reach the Final Four. In fact, it was three times as likely (19%) that your champion didn’t make the Sweet 16 than it is they made the Final Four. Our bracket ended up better than 97% of brackets on ESPN.com. Our biggest oversights include having Indiana State reach the Elite Eight and Arizona lose in the first round. In the opening round 8/9 game of Butler and Old Dominion, the winner was good enough to reach the Final Four. We chose ODU. Missed an opportunity to look like geniuses on that one. One of the hardest parts of picking a tournament is that there are no second chances. Once a team loses, they are done. On the positive side, we correctly predicted four of the five top 4 seeds that failed to reach the Sweet 16, including #1 seed Pitt. We didn’t fill out a Division 3 bracket, but we had the final four from that tournament nailed.

I’ll add Kyle’s disclaimer: Just promise not to place any bets off of our results. We can’t take responsibility for any winnings (or losses) incurred because of these predictions.

Infer.NET is a pretty neat framework that allows you to do things with data a lot easier than with normal code. Try out the examples and see what you can come up with. Can you do better than us?