Getting Started with LM Studio

Many large language models (LLMs) provide features similar to ChatGPT, and these custom models offer several benefits. For example, you can host them yourself, which could benefit some projects and environments. Some models are tuned for particular use cases, like coding or text processing, and others are great for providing chatbot experiences in specific domains.

But isn’t running an LLM on your machine difficult? Sometimes, but that is where LM Studio comes into play.

LM Studio makes firing up a language model super easy. Before this tool, getting a model running on your own machine could take a lot of time. With LM Studio, it’s almost as easy as using ChatGPT.

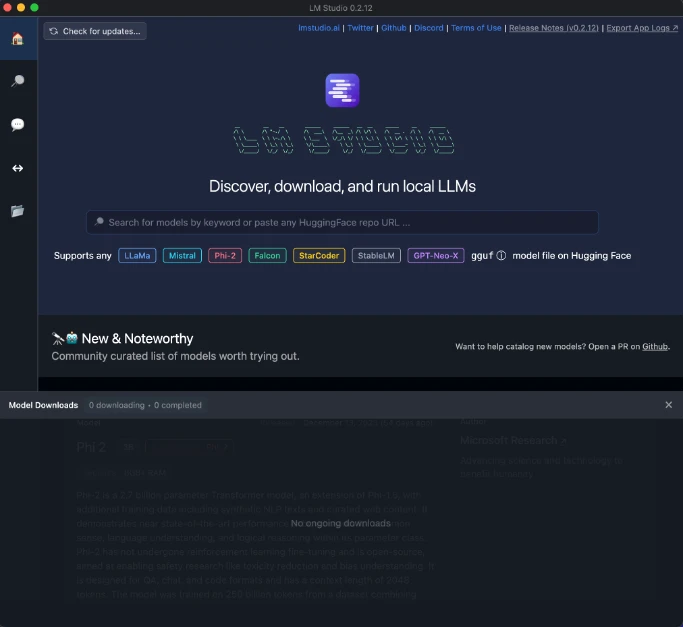

I started by using the Search tab to find and download a model.

I chose dolphin.

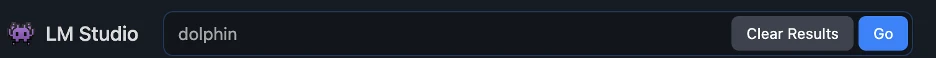

I picked one of the smaller versions at random so I could test it quickly.

Then I used the built-in chat interface to interact with the model.

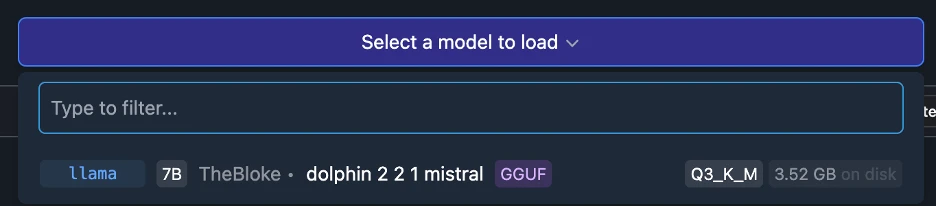

From the chat interface, I selected the model I just downloaded.

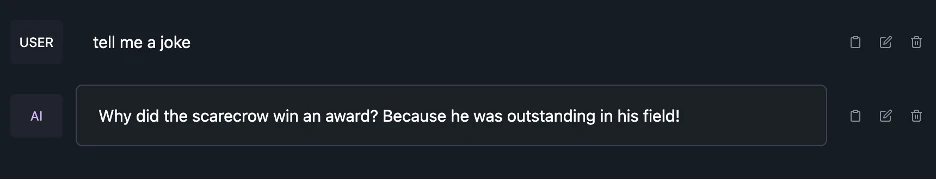

I asked the model to tell me a joke.

It gave me a joke about a scarecrow. Funnily enough, it was the exact same joke I had seen online, so these models must really like it (and scarecrows too).
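I only used the chat interface here, but the same prompt could probably be sent from code. Here is a minimal sketch, assuming LM Studio's local server is switched on and listening at its default address, `http://localhost:1234/v1`, with an OpenAI-style chat completions endpoint. The helper names (`build_chat_request`, `make_request`, `ask`) and the `"local-model"` identifier are placeholders I made up for illustration, so swap in whatever identifier LM Studio shows for the model you downloaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model"):
    """Build an OpenAI-style chat completion payload.

    "local-model" is a placeholder, not a real model identifier.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def make_request(prompt, base_url=BASE_URL):
    """Wrap the payload in an HTTP POST request (nothing is sent yet)."""
    data = json.dumps(build_chat_request(prompt)).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(make_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    # Assumes the response follows the OpenAI chat completions shape.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `print(ask("Tell me a joke."))` should print the model's reply. I split building the request from sending it so the payload can be inspected without a running server.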

That’s it. LM Studio makes it easy to get a model up and running. Even better, these models run on your machine, not the cloud. The experience on my M1 Max Mac was excellent: getting started was easy, and performance was very good.

Find me on X (where I’m @chadmichel), and let me know if you’ve tried out LM Studio. I’d love to hear your thoughts.