The Danger of Incomplete Pictures, Part 1

I was recently re-introduced to one of my favorite essays, Why We Should Build Software Like We Build Houses, by Leslie Lamport. Leslie is one of several thought leaders within our industry whom I really admire, both for his insights into the nature of software design and for his contributions to the products and technologies he has worked on (in Leslie’s case, TLA+ and the recent Cosmos DB project).

There were a couple of quotes in the article that really resonated with me and sparked a few thoughts on a series of blog posts I am writing.

The first was a quote from cartoonist Dick Guindon:

“Writing is nature’s way of letting you know how sloppy your thinking is.”

The second quote is:

“Some programmers argue that the analogy between specs and blueprints is flawed because programs aren’t like buildings. They think tearing down walls is hard but changing code is easy, so blueprints of programs aren’t necessary. Wrong! Changing code is hard – especially if we don’t want to introduce bugs.”

What I like about these two quotes is that they are consistent with my worldview of software design and development, while at the same time challenging the conventional wisdom of our industry at large. Since we at Don’t Panic Labs are trying to advance the state of the art in software design and development in our little corner of the world by “shaking things up a bit,” I thought they would be good touchstones from which to launch this series of essays.

The first quote relates to my firm belief that we do our deepest, most critical thinking when we are forced to articulate our thoughts. I never really did much writing in my engineering undergraduate education. It wasn’t until my first job as a Systems Engineer that I did much writing, and then I was doing it a lot. Most of it was concept documents, requirements specifications, and test plans. I learned early on from these experiences how differently the mind works when you are expressing a concept or idea in words, as opposed to just thinking about it.

Our mental models tend to be more abstract, but when put down on paper they become more concrete. This process often reveals gaps in our understanding of the problem or solution – important gaps that are valuable to identify early on.

I also experienced this when I was teaching at the UNL Raikes school. It became quite apparent that the depth of understanding required to teach a concept is much greater than that required to simply apply it. Somehow, our intuition and instincts (likely influenced by our past experiences) allow us to effectively apply concepts without the depth of understanding required to teach them. I suspect many of us have had that experience whenever someone asked us why we did something the way we did. We often react with “because I know it will work.”

The second quote relates to the situation we often find ourselves in at Don’t Panic Labs where we are expressing views and ideas that tend to run counter to the cultural norms and “conventional wisdom” in our industry. This quote specifically speaks to the notion that we can just start “slinging code” and come back to fix it later. I have discussed this idea in a number of talks describing this as a trap. Martin Fowler demonstrates this brilliantly with his “Design Stamina Hypothesis.”

So, where am I going with this, and what is the theme behind this series? I am going to start with a discussion of what I see as a key contributor to re-work, missed schedules, and poor product fit, and then I will follow up with a couple of processes/techniques we emphasize at Don’t Panic Labs that are specifically designed to mitigate these risks before we start putting fingers to the keyboard and begin coding.

The Danger of Incomplete Pictures

Software development is a team sport with many people, disciplines, perspectives, skill sets, and communication styles represented. When things go wrong, it is often the result of a key problem we see within these multi-disciplined software projects. People might assume this problem is a lack of requirements specificity. I agree that this is a problem, but I feel it is more a symptom than a cause.

I have come to view the lack of requirements specificity as the result of a lack of recognition of how incredibly challenging it is to gain a shared picture of the requirements. We often assume that we have a shared understanding when, in fact, we do not.

Software design of complex systems (aka the type of systems many of us work on) is, by its very nature, a wicked problem:

“A wicked problem is a problem that is difficult or impossible to solve because of incomplete, contradictory, and changing requirements that are often difficult to recognize.”

I am sure we have all experienced this phenomenon in our work. As a side note, one of the challenges with the way we are educating software engineers is that many of the assignments in education are not wicked problems. But I digress.

In my experience, we as engineers are not as effective as we could be at minimizing requirements that are “difficult to recognize.” Some of this is a result of human nature and the challenges of communication. Let me explain.

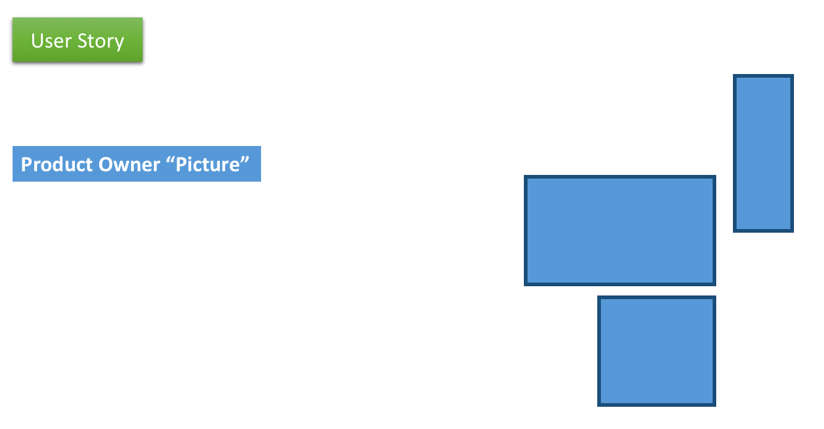

Imagine you are a software engineer who is responsible for implementing a user story. You meet with the product owner and she explains the concept and requirements behind the user story. In her mind, what she described is this:

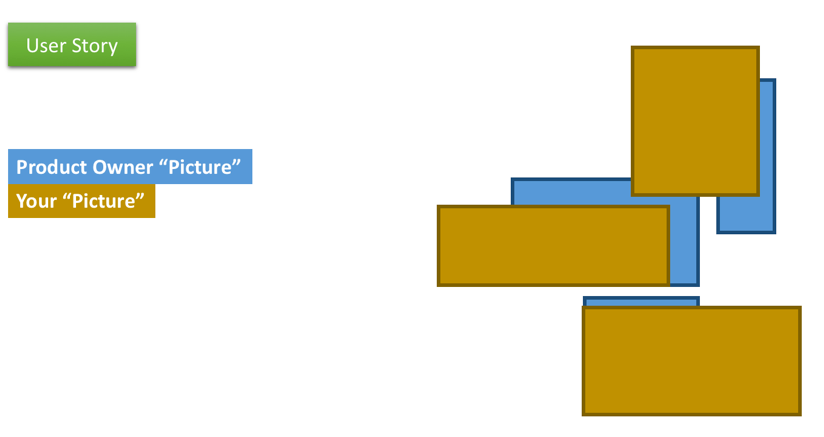

The problem is, you took what you heard and created a mental model that looks like this:

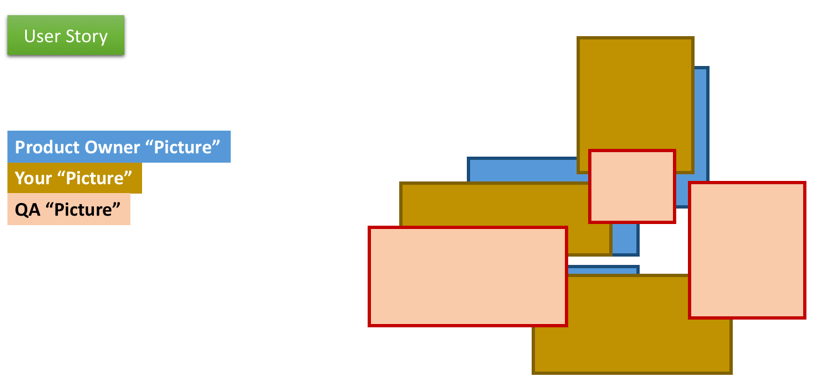

What’s more, when you described this to your QA engineers, they created a mental model that looks like this:

We need to keep in mind that even if we all shared the same picture from these conversations, it is highly unlikely our aggregate picture is complete:

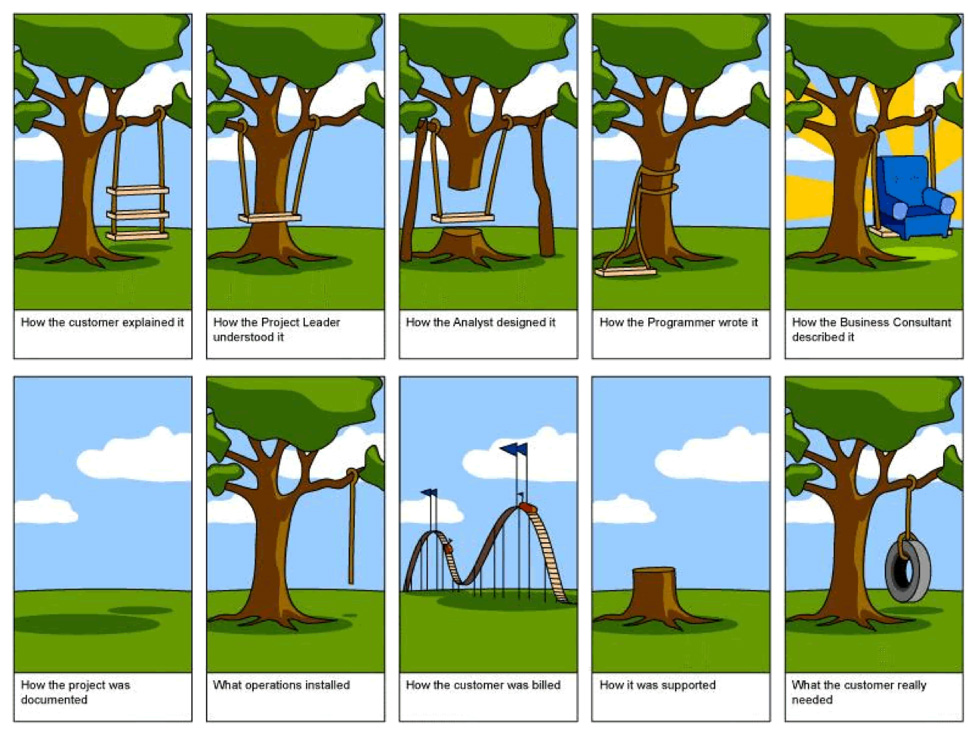

You have probably seen this demonstrated via the following cartoon:

There are two key problems that this presents to us. I have described these as “blind spots” in our thinking:

- Our assumption that we all have a shared picture

- Our assumption that all assumptions and requirements are known

My next two posts in this series will introduce a couple of tools/techniques we have put to use inside Don’t Panic Labs that have made a significant impact on reducing the occurrence of “difficult to recognize” requirements, making the solutions to our projects and problems less “wicked.”

This post was originally published at dougdurham.com.